2020 IEEE Low-Power Computer Vision Challenge

LPCVC 2020 Overview

The 2020 CVPR Workshop of Low-Power Computer Vision Challenge was successfully hosted on 15 June 2020 in Seattle, USA. The workshop featured winners of past Low-Power Image Recognition Challenges, invited speakers from academia and industry, and presentations of accepted papers.

The LPCVC featured three tracks sponsored by Facebook, Xilinx, and Google, and was hosted online from 7/1/2020 to 7/31/2020. More details are posted below. Please join the online discussion forum at lpcvc.slack.com.

The 2020 IEEE Low-Power Computer Vision Challenge (LPCVC) concluded successfully on 2020/07/31, after a month of competition. Forty-six teams submitted 378 solutions in four tracks. During the month-long challenge, many teams submitted multiple solutions and saw their scores improve substantially. The 2020 LPCVC added a video track, expanding the Low-Power Image Recognition Challenge (LPIRC) that started in 2015.

Tracks

For more information, go to the competition introduction page. To see a detailed score breakdown for each submission, go to the leaderboard.

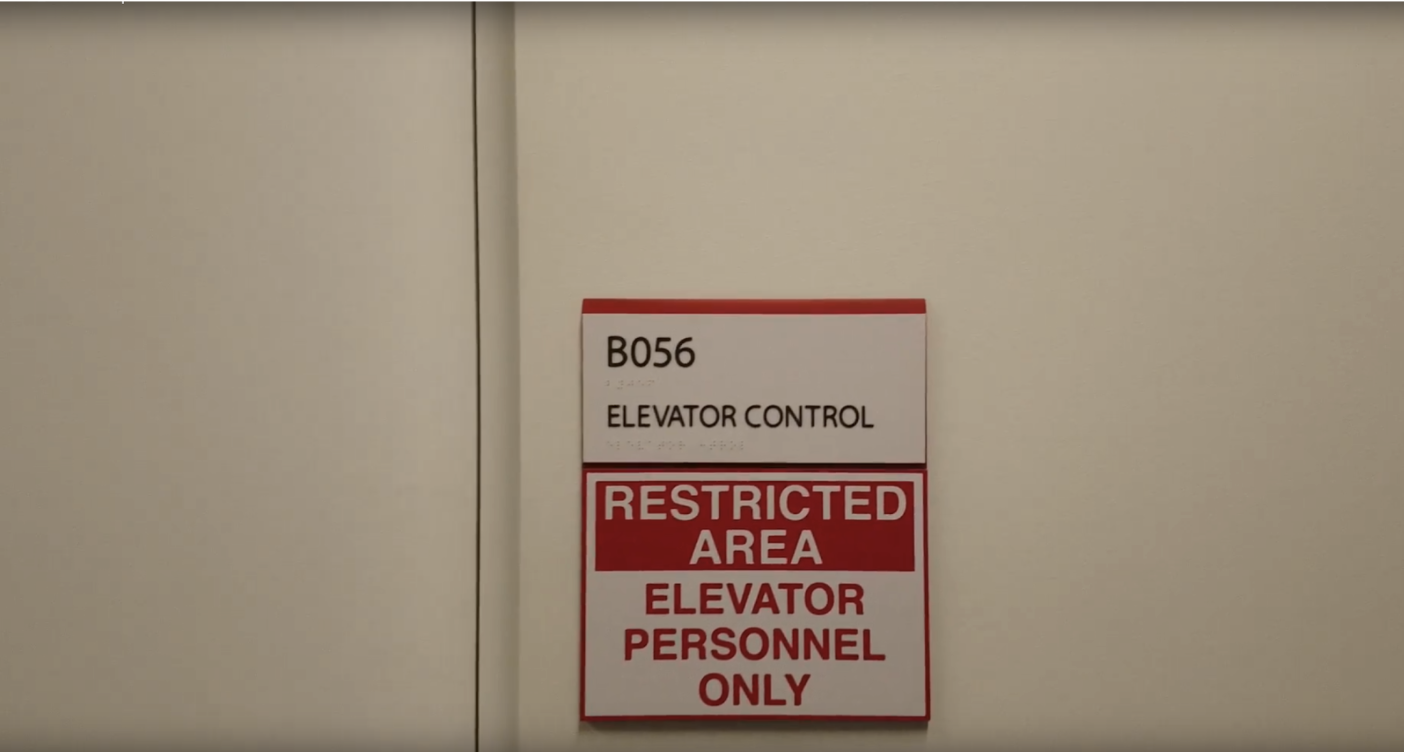

PyTorch UAV Video Track

This track requires participating teams to recognize English letters or numbers on signs in video captured by an unmanned aerial vehicle (UAV). The following two video frames show examples of such a challenge. The challenge takes the form of question-answer pairs. A successful solution must answer with the English letters or numbers that appear in the same frame as the question. For example, if the question is “RESTRICTED AREA”, the answer should be “B056 ELEVATOR CONTROL ELEVATOR PERSONNEL ONLY”. If the question is “CONFERENCE”, the answer should be “122”. Answers are case insensitive. Solutions must use PyTorch running on a Raspberry Pi 3B+.
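The question-answer format and the case-insensitive rule can be illustrated with a minimal sketch. The official scoring method is not described here, so exact matching after lowercasing and whitespace collapsing is an assumption, and the names `normalize`, `EXPECTED`, and `is_match` are hypothetical.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so comparisons ignore case."""
    return " ".join(text.lower().split())


# Question-answer pairs taken from the examples in the track description.
EXPECTED = {
    "RESTRICTED AREA": "B056 ELEVATOR CONTROL ELEVATOR PERSONNEL ONLY",
    "CONFERENCE": "122",
}


def is_match(question: str, answer: str) -> bool:
    """Return True if `answer` equals the expected answer, ignoring case.

    Exact match after normalization is an assumption, not the official metric.
    """
    lookup = {normalize(q): normalize(a) for q, a in EXPECTED.items()}
    return lookup.get(normalize(question)) == normalize(answer)


print(is_match("conference", "122"))
print(is_match("Restricted Area", "b056 elevator control elevator personnel only"))
print(is_match("conference", "123"))
```

A real submission would produce the answers from the video frames; this sketch only shows how a question maps to its expected answer regardless of letter case.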

Eleven teams submitted 84 solutions to this track. The winners of this track are:

| Prize | Team | Organization | Member | Representative |
|---|---|---|---|---|
| First | LPNet | ByteDance Inc | | Xuefeng Xiao (xiaoxuefeng.ailab@bytedance.com) |
| Second | TAMU-KWAI | Texas A & M University and Kwai Inc | | Zhenyu Wu (wuzhenyu_sjtu@tamu.edu) |
| Third | C408 | Stony Brook University (SUNY Korea) | | Seonghwan Jeong (seonghwan.jeong@stonybrook.edu) |

FPGA Image Track

This track uses the Ultra96-V2 board with the Xilinx® Deep Learning Processor Unit (DPU), running PYNQ and Vitis AI software. The challenge is to recognize objects in images. Nineteen teams submitted 115 solutions. The winners of this track are:

| Prize | Team | Organization | Member | Representative |
|---|---|---|---|---|
| First | OnceForAll | MIT HAN Lab | | Zhekai Zhang (zhangzk@mit.edu) |
| Second | MAXX | National Chiao Tung University, Taiwan | | Xuan-Hong Li (nonelee.ee05@g2.nctu.edu.tw) |
| Third | Water | SKLCA, Institute of Computing Technology, CAS | | Shengwen Liang (liangshengwen@ict.ac.cn) |

OVIC TensorFlow Track

The OVIC TensorFlow track has three different challenges:

- Interactive object detection: This category focuses on COCO detection models operating at 30 ms / image on a Pixel 4 smartphone (CPU).

- Real-time image classification on Pixel 4: This category focuses on ImageNet classification models operating at 10 ms / image on a Pixel 4 smartphone (CPU).

- Real-time image classification on LG G8: This category focuses on ImageNet classification models operating at 7 ms / image on an LG G8 smartphone (DSP).
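The per-image latency targets above can be expressed as a simple budget check. This is only a sketch: how the official benchmark measured latency on the phones is not described here, timing on a development machine does not predict on-device speed, and the names `BUDGET_MS`, `mean_latency_ms`, `within_budget`, and `fake_model` are hypothetical.

```python
import time
from statistics import mean

# Latency targets in milliseconds per image, as listed for each category.
# The dictionary keys are hypothetical labels, not official identifiers.
BUDGET_MS = {
    "detection_pixel4_cpu": 30.0,
    "classification_pixel4_cpu": 10.0,
    "classification_lg_g8_dsp": 7.0,
}


def mean_latency_ms(run_once, n_images: int = 20) -> float:
    """Call `run_once` n_images times and return the mean wall-clock time in ms."""
    samples = []
    for _ in range(n_images):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean(samples)


def within_budget(category: str, latency_ms: float) -> bool:
    """Return True if the measured latency meets the category's target."""
    return latency_ms <= BUDGET_MS[category]


def fake_model() -> None:
    """Stand-in for a single inference call (assumption: sleeps about 2 ms)."""
    time.sleep(0.002)


latency = mean_latency_ms(fake_model)
print(f"{latency:.2f} ms, within budget:",
      within_budget("classification_pixel4_cpu", latency))
```

Replacing `fake_model` with a real inference call gives a rough host-side estimate; only measurements on the target device are meaningful for these categories.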

Sixteen teams submitted 179 solutions to these tracks. The winners of these tracks are:

Interactive Object Detection

| Prize | Team | Organization | Member | Representative |
|---|---|---|---|---|
| First | OnceForAll | MIT HAN Lab | | Yubei Chen (yubeic@berkeley.edu) |
| Second | SjtuBicasl | Shanghai Jiao Tong University | | Xinzi Xu (xu_xinzi@sjtu.edu.cn) |
| Third | Novauto | Novauto Inc | | Changcheng Tang (angcc1127@gmail.com) |

Real-time Image Classification on Pixel 4

| Prize | Team | Organization | Member | Representative |
|---|---|---|---|---|
| First | BAIDU&THU | Baidu Inc & Tsinghua University | | Zerun Wang (wangzrthu@gmail.com) |
| Second | imchinfei | Beihang University | | Fei Qin (64242200@qq.com) |
| Third | LPNet | ByteDance Inc | | Xuefeng Xiao (xiaoxuefeng.ailab@bytedance.com) |

Real-time Image Classification on LG G8

| Prize | Team | Organization | Member | Representative |
|---|---|---|---|---|
| First | LPNet | ByteDance Inc | | Xuefeng Xiao (xiaoxuefeng.ailab@bytedance.com) |
| Second | imchinfei | Beihang University | | Fei Qin (64242200@qq.com) |
| Third (Tie) | OnceForAll | MIT HAN Lab | | Yubei Chen (yubeic@berkeley.edu) |
| Third (Tie) | FoxPanda | National Chiao Tung University and National Tsing Hua University | | jacoblau.cs08g@nctu.edu.tw |

LPCVC started in 2015 with the aim of identifying energy-efficient technologies for computer vision. IEEE Rebooting Computing has been the primary financial sponsor and provides administrative support. A new IEEE technical committee has been created to coordinate future activities in low-power computer vision. Anyone interested in joining the committee should contact Terence Martinez, Program Director, Future Directions, IEEE Technical Activities, at t.c.martinez@ieee.org.

Program Recordings

| Time | Speaker |
|---|---|
| 06:00 | Organizers and Sponsors’ Welcome |
| 06:05 | History of Low-Power Computer Vision Challenge, Terence Martinez, IEEE |
| 06:10 | Paper Presentations |
| 07:00 | Poster Presentations |
| 07:10 | Q&A, Moderator: Ming-Ching Chang, SUNY Albany |
| 07:30 | Invited Speeches |
| 08:15 | Q&A, Moderator: Carole-Jean Wu, Facebook |
| 08:30 | Invited Speeches |
| 09:15 | Q&A, Moderator: Jaeyoun Kim, Google |
| 09:30 | Invited Speech (Tools) |
| 10:15 | Q&A, Moderator: Joe Spisak, Facebook |
| 10:30 | Panel: How can you build startups using low-power computer vision? Moderator: Song Han, MIT |
| 11:30 | Adjourn |

Organizers

| Name | Role and Organization | Email |
|---|---|---|
| Hartwig Adam | Google | hadam@google.com |
| Mohamed M. Sabry Aly | IEEE Council on Design Automation, Nanyang Technological University | msabry@ntu.edu.sg |
| Alexander C Berg | University of North Carolina | aberg@cs.unc.edu |
| Ming-Ching Chang | University at Albany - SUNY | mchang2@albany.edu |
| Bo Chen | Google | bochen@google.com |
| Shu-Ching Chen | Florida International University | chens@cs.fiu.edu |
| Yen-Kuang Chen | IEEE Circuits and Systems Society, Alibaba | y.k.chen@ieee.org |
| Yiran Chen | ACM Special Interest Group on Design Automation, Duke University | yiran.chen@duke.edu |
| Chia-Ming Cheng | Mediatek | cm.cheng@mediatek.com |
| Bohyung Han | Seoul National University | bhhan@snu.ac.kr |
| Andrew Howard | Google | howarda@google.com |
| Xiao Hu | Manages lpcv.ai website, Purdue student | hu440@purdue.edu |
| Christian Keller | Facebook | chk@fb.com |
| Jaeyoun Kim | Google | jaeyounkim@google.com |
| Mark Liao | Academia Sinica, Taiwan | liao@iis.sinica.edu.tw |
| Tsung-Han Lin | Mediatek | Tsung-Han.Lin@mediatek.com |
| (contact) Yung-Hsiang Lu | Chair, Purdue | yunglu@purdue.edu |
| An Luo | Amazon | anluo@amazon.com |
| Terence Martinez | IEEE Future Directions | t.c.martinez@ieee.org |
| Ryan McBee | Southwest Research Institute | ryan.mcbee@swri.org |
| Andreas Moshovos | University of Toronto/Vector Institute | moshovos@ece.utoronto.ca |
| Naveen Purushotham | Xilinx Inc. | npurusho@xilinx.com |
| Tim Rogers | Purdue | timrogers@purdue.edu |
| Mei-Ling Shyu | IEEE Multimedia Technical Committee, University of Miami | shyu@miami.edu |
| Ashish Sirasao | Xilinx Inc. | asirasa@xilinx.com |
| Joseph Spisak | Facebook | jspisak@fb.com |
| George K. Thiruvathukal | Loyola University Chicago | gkt@cs.luc.edu |
| Yueh-Hua Wu | Academia Sinica, Taiwan | kriswu@iis.sinica.edu.tw |
| Junsong Yuan | University at Buffalo | jsyuan@buffalo.edu |
| Anirudh Vegesana | Manages lpcv.ai website, Purdue student | avegesan@purdue.edu |
| Carole-Jean Wu | Facebook | carolejeanwu@fb.com |

FAQ

- Q: Where can I sign the agreement form?

A: You sign the agreement form when submitting your solution to any track. Each account only needs to sign it once. The agreement form is a legally binding document, so please read it carefully.

- Q: How can we register as a team?

A: A team needs only one registered account. You can list your team members when signing the agreement form.

- Q: How can I register for a specific track?

A: Registered accounts are not restricted to any particular track. An account is considered to be participating in a track once it submits a solution to that track.