Low-Power Computer Vision Workshop at ICCV 2019

2019 ICCV Workshop

Seoul, Republic of Korea

Monday, 28 October 2019

The 2019 IEEE Workshop on Low-Power Computer Vision concluded successfully on 28 October 2019 in Seoul. This workshop was co-located with the International Conference on Computer Vision.

This workshop features the winners of the past Low-Power Image Recognition Challenges, invited speakers from academia and industry, as well as presentations of accepted papers. Two tracks of on-site competitions were held. Google and Xilinx are the technical and financial sponsors of the on-site competitions.

In the FPGA (Field Programmable Gate Arrays) Track, the winners are:

| Rank | Team Name | Members | Emails |

|---|---|---|---|

| 1 | IPIU | Lian Yanchao, Jia Meixia, Gao Yanjie | xuliu@xidian.edu.cn |

| 2 | SkrSkr | Weixiong Jiang, Yingjie Zhang, Yajun Ha | jiangwx@shanghaitech.edu.cn |

| 3 | XJTU-Tripler | Boran Zhao, Pengchen Zong, Wenzhe Zhao | boranzhao@stu.xjtu.edu.cn pengjuren@xjtu.edu.cn |

In the DSP (Digital Signal Processing) Track, the winners are:

| Rank | Team Name | Members | Emails |

|---|---|---|---|

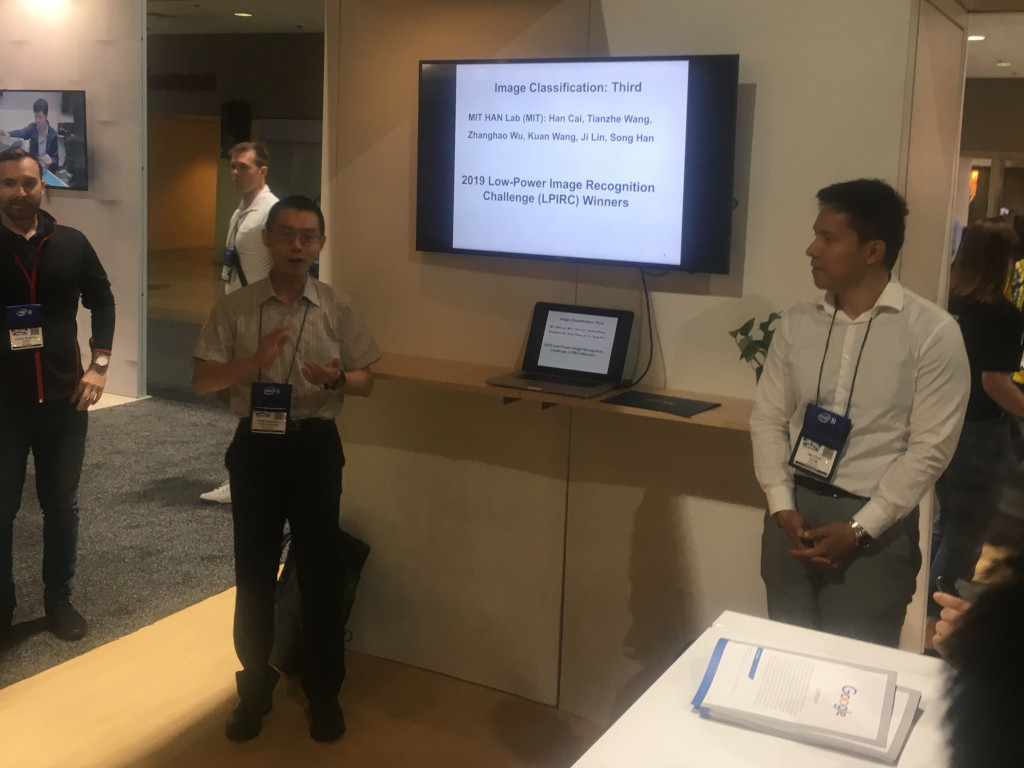

| 1 | MIT HAN Lab | Tianzhe Wang, Han Cai, Zhekai Zhang, Song Han | usedtobe@mit.edu hancai@mit.edu zhangzk@mit.edu songhan@mit.edu |

| 2 | ForeverDuke | Hsin-Pai Cheng, Tunhou Zhang, Shiyu Li | dave.cheng@duke.edu tunhou.zhang@duke.edu shiyu.li@duke.edu |

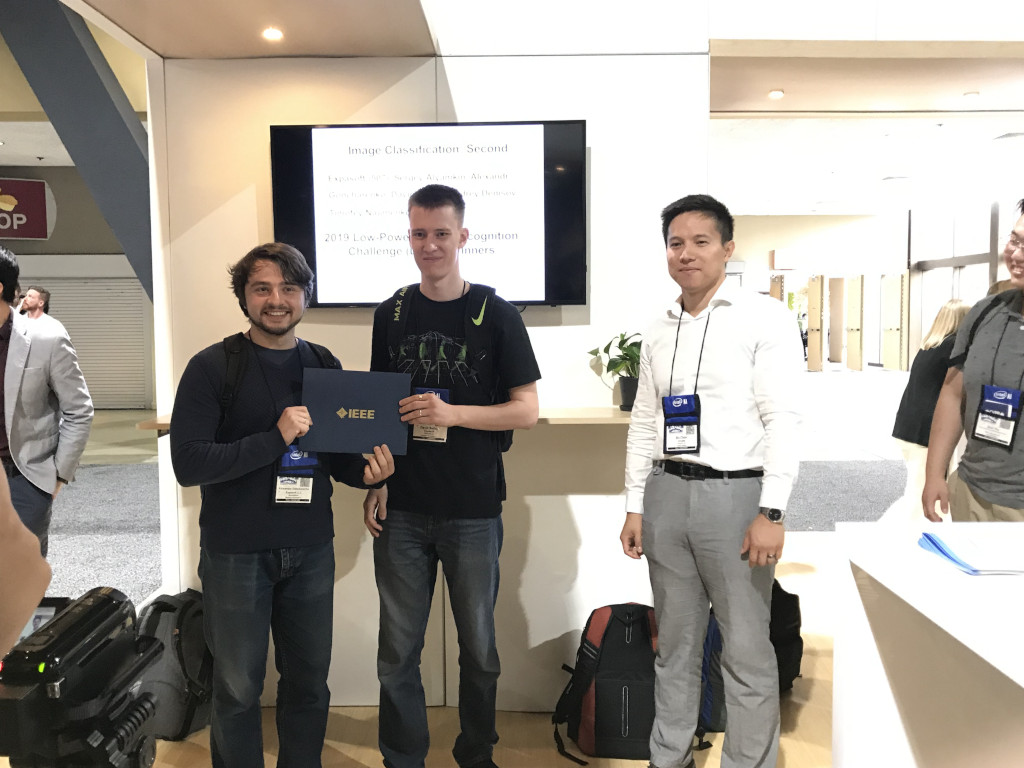

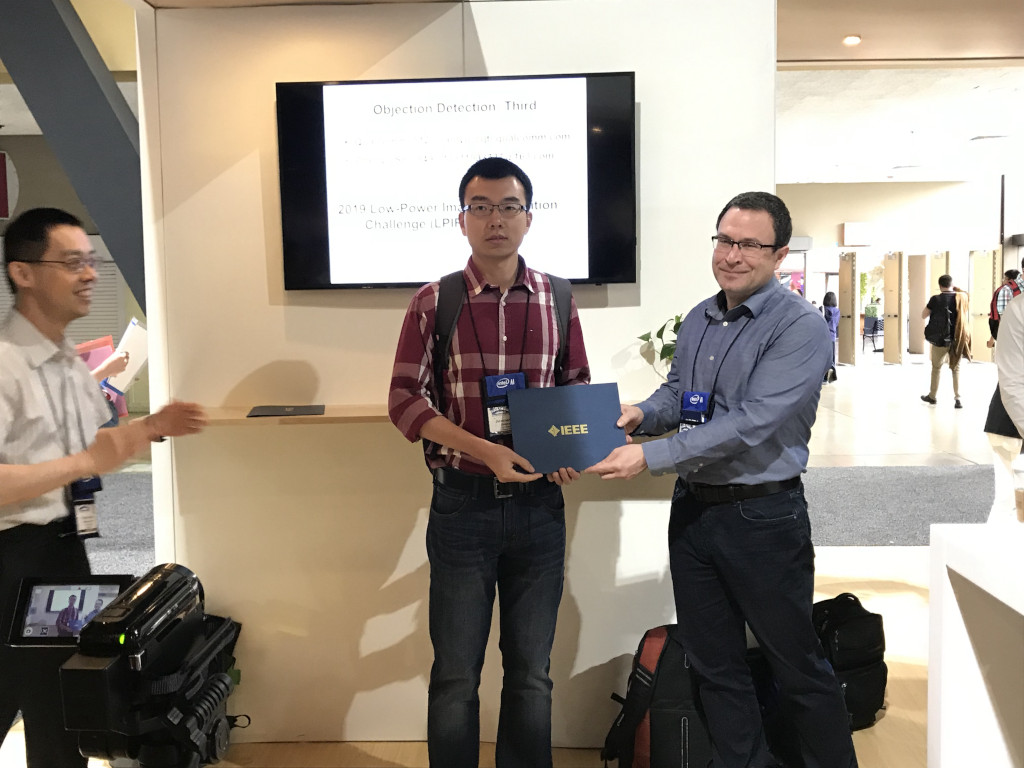

2019 ICCV Workshop Gallery

Program for 2019 ICCV Workshop “Low-Power Computer Vision”

28 October 2019, Seoul

| Time | Speaker |

|---|---|

| 08:30 | Organizers’ Welcome Yung-Hsiang Lu <yunglu@purdue.edu> and Terence Martinez <t.c.martinez@ieee.org> |

| 08:40 | Tensorflow Online Competition 2018-2019, Bo Chen <bochen@google.com> |

| 08:50 | 2018 LPIRC Winners, Moderator: Peter Vajda <vajdap@fb.com>

|

| 09:30 | 2019 LPIRC Winners, Moderator: Soonhoi Ha <sha@snu.ac.kr>

|

| 10:10 | Break |

| 10:40 | 2018 and 2019 LPIRC Winner, Moderator: Bo Chen <bochen@google.com> Award-winning Methods for Object Detection Challenge at LPIRC 2019, Tao Sheng <tsheng@amazon.com>, Peng Lei <leipeng@amazon.com>, and Yang Liu <yangliu@lab126.com> |

| 11:10 | New Perspectives, Moderator: Tao Sheng <tsheng@amazon.com>

|

| 12:40 | Break |

| 13:40 | Paper Presentations, Moderator: Jaeyoun Kim <jaeyounkim@google.com>

|

| 15:00 | Break |

| 15:20 | Paper Presentations, Moderator: Hsin-Pai Cheng <dave.cheng@duke.edu>

|

| 16:40 | Break |

| 16:50 | On-Site AI Acceleration Competition (by invitation only) Organizers: Google and Xilinx |

| 17:30 | Adjourn |

Abstracts

08:50-09:10

Title: Algorithm-Software-Hardware Codesign for CNN Acceleration

Speaker: Soonhoi Ha <sha@snu.ac.kr>

Abstract: There are three approaches to improve the energy efficiency of computer vision algorithms based on convolution networks (CNN). One is to find an algorithm that gives better accuracy than the other known algorithms under the constraints of real-time performance and available resources. The second is to develop a new hardware accelerator with higher performance per watt than GPUs that are widely used. Lastly, we can apply various software optimization techniques such as network pruning, quantization, and low rank approximation for a given network and the hardware platform. While those approaches have been researched separately, algorithm-software-hardware codesign will give the better result than any one alone. In this talk, I will present an effort that has been made in our laboratory. We have developed a novel CNN accelerator, based on the hardware-software codesign methodology where CNN compiler has been developed on a simulator without hardware implementation. We are developing an NAS (Neural architecture search) framework for the CNN accelerator.

Biography: Soonhoi Ha a professor and the chair in the department of Computer Science and Engineering at Seoul National University. He received the Ph.D. degree in EECS from U. C. Berkeley in 1992. His current research interests include HW/SW codesign of embedded systems, embedded machine learning, and IoT. He is an IEEE fellow.

09:10-09:30

Title: The winning solution of LPIRC 2018 track3 and its applications to real world

Speaker: Suwoong Lee <suwoong@etri.re.kr> and Bayanmunkh Odgerel <baynaamn7@kpst.co.kr>

Abstract: In this talk, we review the winning solution of LPIRC 2018 track3 and its practical applications. In the first part, detailed procedures for finding the winning solution are presented. We also present our approaches for improving the winning solution after LPIRC 2018 challenge. In the second part, several practical applications using our low-power image recognition techniques are introduced. Applications are reviewed in three aspects: software, hardware, and platforms. Finally, we conclude our talk by presenting future research directions.

Biography: Suwoong Lee is senior researcher at Electronics and Telecommunications Research Institute (ETRI). He received M.S at KAIST, and joined the creative contents research division at ETRI in 2007. He has researched various image understanding technologies and developed related products, before and after the deep learning era. His current research interest is lightweight object recognition technologies using deep learning.

The project’s contributors include Suwoong Lee <suwoong@etri.re.kr>, Bayanmunkh Odgerel <aynaamn7@kpst.co.kr>, Seungjae Lee <seungjlee@etri.re.kr>, Junhyuk Lee <jun@kpst.co.kr>, Hong Hanh Nguyen <honghanh@kpst.co.kr>, Jong Gook Ko <jgko@etri.re.kr>.

Seungjae Lee is a principal researcher at the Electronics and Telecommunications Research Institute(ETRI). He has researched content identification, classification, and retrieval systems. Junhyuk Lee is CEO at Korea Platform Service Technologies (KPST). He founded KPST in 2008, and currently, he is leading deep learning and mobile edge computing R&D team at KPST. Hong Hanh Nguyen is currently working as a senior member of the R&D team of KPST. Her current research is focusing on object recognition solutions using deep learning on embedded devices. Jong Gook Ko is a principal researcher at ETRI. He joined the security research division at ETRI in 2000. His current research interest is object recognition technologies using deep learning. ETRI & KPST team participated in visual searching related challenges such as ImageNet challenge (classification and localization: 5th place in 2016, detection: 3rd place in 2017), Google Landmark Retrieval (8th place in 2018) and Low Power Image Recognition Challenge (1st place in 2018).

09:30-09:50

Title: Winning Solution on LPIRC-2019 Competition

Speaker: Hensen Chen <hesen.chs@alibaba-inc.com>

Abstract: With the development of computer vision technology in recent years, more and more mobile/IoT devices rely on visual data for making decisions. The increasing industrial demands to deploy deep neural networks on resource constrained mobile device motivates recent research of efficient structure for deep learning. In this work, we designed a hardware-friendly deep neural network structure based on the characteristics of mobile devices. Combined with advanced training programs, our mode archive 8-bit inference top-1 accuracy in 66.93% on imagenet validation dataset, with only 23ms inference speed on Google Pixel2.

Abstract: Hensen is an algorithm engineer at Alibaba Group Inc. Hensen received Master degree in electronic engineering from Beijing University of Posts and Telecommunications in 2018 under the supervision of Prof. Jingyu Wang. Hensen’s research interest mainly focuses on model compression and efficient convolutional network.

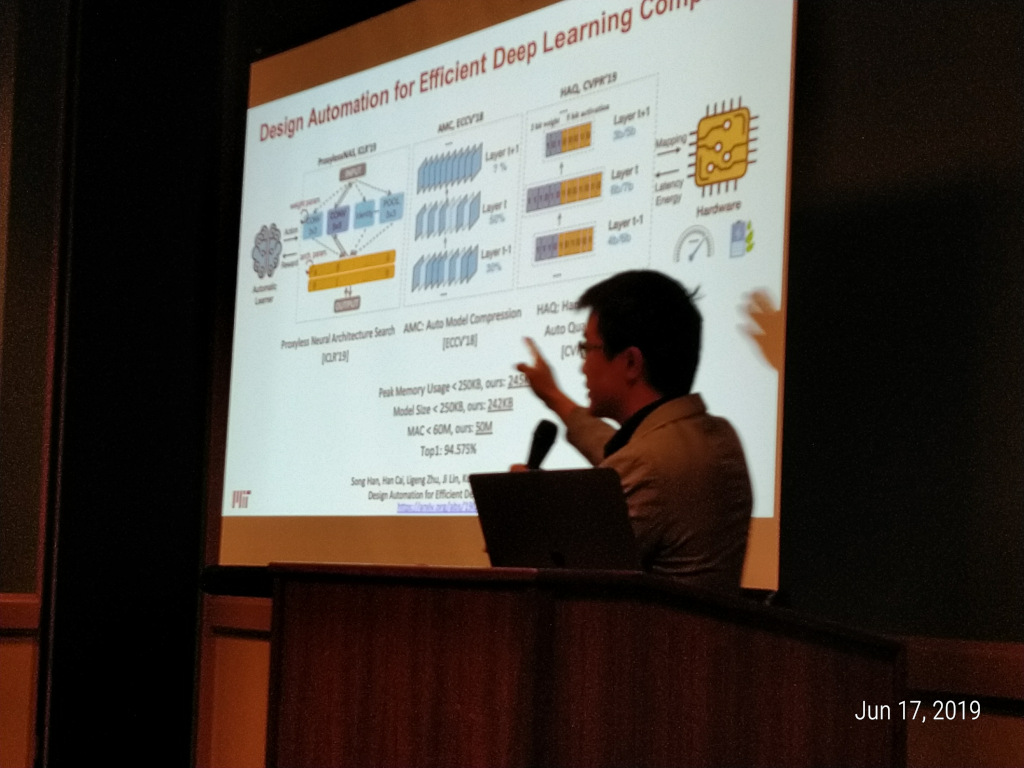

09:50-10:10

Title: On-Device Image Classification with Proxyless Neural Architecture Search and Quantization-Aware Fine-tuning

Speaker: Ji Lin <jilin@mit.edu>

Abstract: It is challenging to efficiently deploy deep learning models on resource-constrained hardware devices (e.g., mobile and IoT devices) with strict efficiency constraints (e.g., latency, energy consumption). We employ Proxyless Neural Architecture Search (ProxylessNAS) to auto design compact and specialized neural network architectures for the target hardware platform. ProxylessNAS makes latency differentiable, so we can optimize not only accuracy but also latency by gradient descent. Such direct optimization saves the search cost by 200× compared to conventional neural architecture search methods. Our work is followed by quantization-aware fine-tuning to further boost efficiency. In the Low Power Image Recognition Competition at CVPR 19, our solution won the 3rd place on the task of Real-Time Image Classification (online track).

Biography: Ji is currently a first-year Ph.D. student at MIT EECS. Previously, He graduated from the Department of Electronic Engineering, Tsinghua University. His research interests lie in efficient and hardware-friendly machine learning and its applications

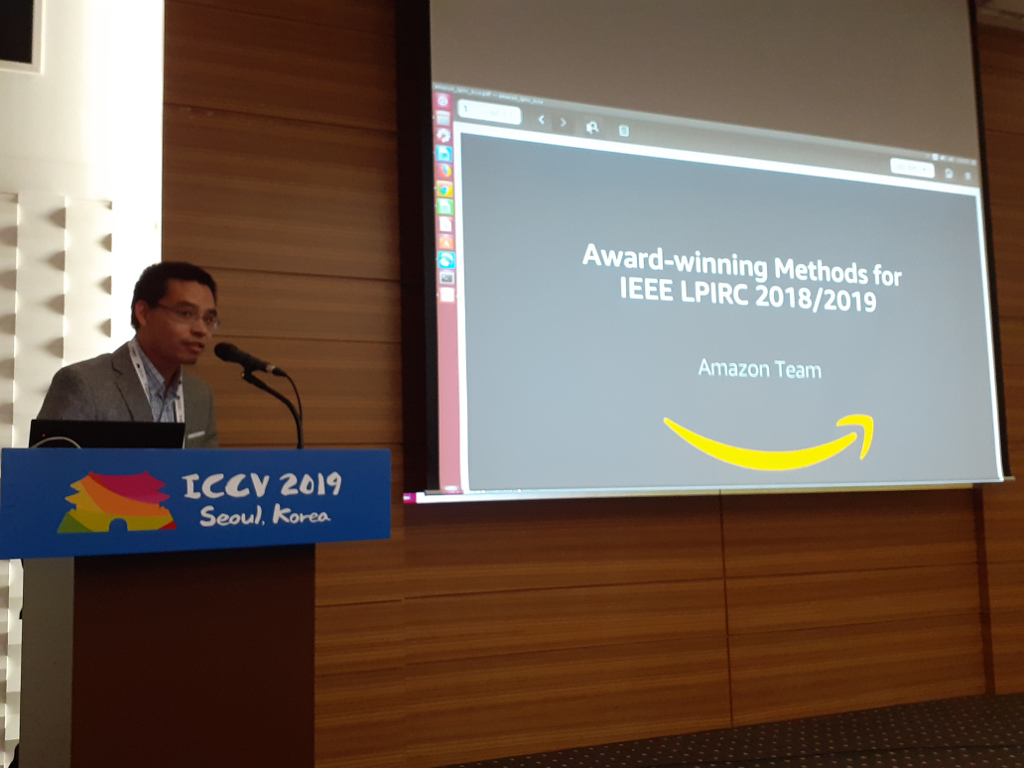

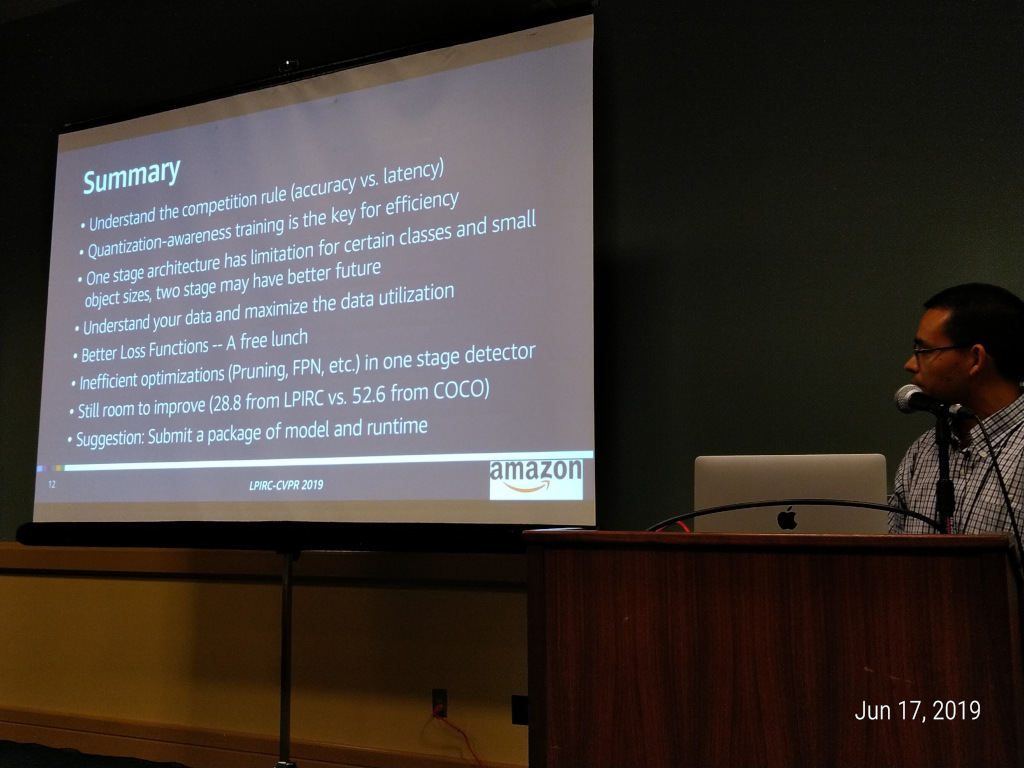

10:40-11:10

Title: Award-winning Methods for Object Detection Challenge at LPIRC 2019

Speaker: Tao Sheng <tsheng@amazon.com>, Peng Lei <leipeng@amazon.com>

Abstract: The LPIRC is an annual competition for the best technologies in image classification and object detection measured by both efficiency and accuracy. As the winners were announced at and LPIRC-II 2018 and LPIRC-CVPR 2019, our Amazon team has won the top prizes for object detection challenge and image classification challenge. We would like to share our award-winning methods in this talk, which can be summarized as five major steps. First, 8-bit quantization friendly model is one of the key winning points to achieve the fast execution time while maintaining the high accuracy on edge devices. Second, network architecture optimization is another winning keypoint. We optimized the network architecture to meet the latency requirement on Pixel2 phone. The third one is dataset filtering. We removed the images with small objects from the training dataset after thoroughly analyzing the training curves, which significantly improved the overall accuracy. The forth one is the loss function optimization. And the fifth one is non-maximum suppression optimization. By combining all the above steps, our final solutions were able to win the 1st prize of object detection challenge in the two consecutive LPIRC.

Biography: Dr. Tao Sheng is a Senior Deep Learning Engineer at Amazon. He has been working on multiple cutting-edge projects in the research areas of computer vision, machine learning in more than 10 years. Prior to Amazon, he worked with Qualcomm and Intel. He has strong interests in deep learning for mobile vision, edge AI, etc. He has published ten US and International patents and night papers. Most recently, he led the team to win the Top Prizes of IEEE International Low-Power Image Recognition Challenge at LPIRC-I 2018, LPIRC-II 2018, and LPIRC 2019. Dr. Peng Lei is currently an Applied Scientist at Amazon.com Services, Inc. His role is to apply advanced Computer Vision and Machine Learning techniques to build solutions to challenging problems that directly impact the company's product. He is an experienced researcher with a demonstrated history of working in both academia and industry. His research interests include object detection, person re-identification, temporal action segmentation, semantic video segmentation and motion estimation in videos. He received a Ph.D. degree from Oregon State University in 2018 with a successful record of publications including 2 CVPRs, 2 ECCVs, 1 ICCV, 2 ICPRs and 1 CVPR Workshop. He won 1st place in Low-Power Image Recognition Challenge 2019 and 4th place in DAVIS Video Segmentation Challenge 2017, and served as a reviewer for CVPR 2019, ICCV 2019 and AAAI 2020.

11:10-11:40

Title: Value-Based Deep Learning Hardware Acceleration

Speaker: Andreas Moshovos <moshovos@eecg.toronto.edu>

Abstract: I will be reviewing our efforts in identifying value properties of Deep Learning models that hardware accelerators can use to improve execution time performance, memory traffic and storage, and energy efficiency. Our goal it to not sacrifice accuracy and to not require any changes to the model. I will be presenting our accelerator family which includes designs that exploit these properties. Our accelerators exploit ineffectual activations and weights, their variable precision requirements, or even their value content at the bit level. Further, our accelerators also enable on-the-fly trading off accuracy for further performance and energy efficiency improvements. I will emphasize our work on accelerators for machine learning implementations of computational imaging. Finally, I will overview NSERC COHESA, a Canadian research network which targets the co-design of next generation machine learning hardware and algorithms.

Biography: Dr.Moshovos teaches at the Department of Electrical and Computer Engineering at the University of Toronto. He taught at the Northwestern University, USA, the University of Athens, Greece, the Hellenic Open University, Greece, and as a invited professor at the École polytechnique fédérale de Lausanne, Switzerland. He received a Bachelor and a Master Degree from the University of Crete, Greece and a Ph.D. from the University of Wisconsin-Madison.

His research interests lie primarily in the design of performance-, energy-, and/or cost-optimized computing engines for various applications domains. Most work thus far has been on high-performance general-purpose systems.My current work emphasizes highly-specialized computing engines for Deep Learning. He will also be serving as the Director of the newly formed National Sciences and Engineering Research Council Strategic Partnership Network on Machine Learning Hardware Acceleration (NSERC COHESA), a partnership of 19 Researchers across 7 Universities involving 8 Industrial Partners. He has been awarded the ACM SIGARCH Maurice Wilkes mid-career award, a National Research Foundation CAREER Award, two IBM Faculty Partnership awards, a Semiconductor Research Innovation award, an IEEE Top Picks in Computer Architecture Research, and a MICRO conference Hall of Fame award. He has served as the Program Chair for the ACM/IEEE International Symposium on Microarchitecture and the ACM/IEEE International Symposium on Performance Analysis of Systems and Software. He is a Fellow of the ACM and a Faculty Affiliate of the Vector Institute.

11:40-12:10

Title: Ultra-low Power Always-On Computer Vision

Speaker: Edwin Park <epark@qti.qualcomm.com>

Abstract: The challenge of on device battery power computer vision is to process computer vision at ultra low power. In tis talk, we will discuss computer vision looking at the entire pipeline from sensor to results.

Biography: Edwin Park is a Principal Engineer with Qualcomm Research since 2011. He is directly responsible for leading the system activities surrounding the Always-on Computer Vision Module (CVM) project. In this role, he leads his team in developing the architecture, creating the algorithms, and producing models so devices can “see” at the lowest power. Featuring an extraordinarily small form factor, Edwin has designed the CVM to be integrated into a wide variety of battery-powered and line-powered devices, performing object detection, feature recognition, change/motion detection, and other applications.

Since joining Qualcomm Research, Edwin has worked on a variety of projects such as making software modifications to Qualcomm radios, enabling them to support different radio standards. Edwin has also focused on facilitating carriers’ efforts worldwide to migrate from their legacy 2G cellular systems to 3G, allowing them to increase their data capacity. Edwin Park’s work at Qualcomm Research has resulted in over 40 patents.

Prior to joining Qualcomm, Park was a founder at AirHop Communications and Vie Wireless Technologies. Edwin had various engineering and management positions at Texas Instruments and Dot Wireless. He also worked at various other startups including ViaSat and Nextwave.

Park received a Master Electrical Engineering from Rice University, Houston, TX, USA and a BSEE specializing in Physical Electronics and BA in Economics also from Rice University.

12:10-12:40

Title: Hardware-Aware Deep Neural Architecture Search

Speaker: Peter Vajda <vajdap@fb.com>

Abstract: A central problem in the deployment of deep neural networks is maximizing accuracy within the compute performance constraints of low power devices. In this talk, we will discuss approaches to addressing this challenge based automated network search and adaptation algorithms. These algorithms not only discover neural network models that surpass state-of-the-art accuracy, but are also able to adapt models to achieve efficient implementation on low power platforms for real-world applications.

Biography: Peter Vajda is a Research Manager leading the Mobile Vision efforts at Facebook. Before joining Facebook, he was a Visiting Assistant Professor in Professor Bernd Girod’s group at Stanford University, Stanford, USA. He was working on personalized multimedia system and mobile visual search. Peter completed his Ph.D. with Prof. Touradj Ebrahimi at the Ecole Polytechnique Fédéral de Lausanne (EPFL), Lausanne, Switzerland, 2012.

13:40-14:00

Title: Direct Feedback Alignment based Convolutional Neural Network Training for Low-power Online Learning Processor

Speaker: Donghyeon Han <hdh4797@kaist.ac.kr>

Biography: Donghyeon Han received the B.S. and M.S. degree in electrical engineering from the Korea Advanced Institute of Science and Technology (KAIST), Daejeon, South Korea, in 2017 and 2019, respectively. He is currently pursuing the Ph.D. degree. His current research interests include low-power system-on-chip design, especially focused on deep neural network learning accelerators and hardware-friendly deep learning algorithms.

14:00-14:20

Title: A system-level solution for low-power object detection

Speaker: Fanrong Li <lifanrong2017@ia.ac.cn>

Biography: Fanrong Li is a Ph.D. student at the Institute of Automation, Chinese Academy of Science. His research interests mainly focus on analyzing and designing computer architectures for neural networks as well as developing heterogeneous reconfigurable accelerators.

14:20-14:40

Title: Low-power neural networks for semantic segmentation of satellite images

Speaker: Gaetan Bahl <gaetan.bahl@inria.fr>

Biography: Gaetan is a first year PhD Student at INRIA (French Institute for Research in Computer Science and Automation), funded by IRT Saint-Exupery and working on Deep Learning architectures for onboard satellite image analysis. After receiving his Master's degree from Grenoble INP - Ensimag in 2017, he worked in the photogrammetry industry for a year on digital surface model generation from drone imagery and deep learning for segmentation of 3D urban models.

14:40-15:00

Title: Efficient Single Image Super-Resolution via Hybrid Residual Feature Learning with Compact Back-Projection Network

Speaker: Feiyang Zhu <2418288750@qq.com>

Biography: Feiyang is a graduate student at the College of Computer Science of Sichuan University.

15:20-15:40

Title: Automated multi-stage compression of neural networks

Speaker: Julia Gusak <y.gusak@skoltech.ru>

Biography: Julia received her Master's degree in Mathematics and Ph.D. degree in Probability Theory and Statistics from Lomonosov Moscow State University. Now she is a postdoc at Skolkovo Institute of Science and Technology in the laboratory "Tensor networks and deep learning for applications in data mining", where she closely collaborates with Prof. Ivan Oseledets and Prof. Andrzej Cichocki. Her recent research deals with neural networks compression and acceleration. Also, she is very interested in audio-related problems, in particular, dialogue systems, text to speech and speaker identification tasks.

15:40-16:00

Title: Enriching Variety of Layer-wise Learning Information by Gradient Combination

Speaker: Chien-Yao Wang <x102432003@yahoo.com.tw>

Biography: Chien-Yao Wang received the B.S. degree and PH. D. degree in applied computer science and information engineering from National Central University, Zhongli, Taiwan, in 2013 and 2017. Currently, he is a Postdoctoral Research Scholar in the Institute of Information Science, Academia Sinica. His research interests include signal processing, deep learning, and machine learning. He is an honorary member of Phi Tau Phi Scholastic Honor Society.

16:00-16:20

Title: 512KiB RAM is enough! Live camera face re-identification DNN on MCU

Speaker: Maxim Zemlyanikin <maxim.zemlyanikin@xperience.ai>

Biography: Maxim is a deep learning engineer at Xperience AI. His research interests are image retrieval in various fields (face recognition, person re-identification, visual search in fashion domain) and low-power deep learning (neural network compression techniques, resource-efficient neural network architectures). Maxim received his MSc in Data Mining from the Higher School of Economics in 2019.

16:20-16:40

Title: Real Time Object Detection On Low Power Embedded Platforms

Speaker: George Jose <George.Jose@Harman.com>

Biography: George Jose completed his gradation in Electronics and Communication Engineering from National Institute of Technology, Calicut. He then completed his post graduation at IIT Bombay where he worked on beamforming techniques to improve distant speech recognition using microphone arrays. Currently he is working as Assistant Software Engineer at Harman International. In his 2 years of experience in the software industry, he has worked on a wide range of areas like object detection for ADAS systems, autonomous indoor navigation, adversarial defence mechanisms, speaker identification, keyword detection etc.

Organizers

- Alexander C Berg, Associate Professor, University of North Carolina at Chapel Hill, aberg@cs.unc.edu

- Bo Chen, Software Engineer, Google Inc. bochen@google.com

- Yiran Chen, Associate Professor, Duke University, yiran.chen@duke.edu

- Yen-Kuang Chen, Research Scientist, Alibaba, y.k.chen@ieee.org

- Eui-Young Chung, Professor, Yonsei University, eychung@yonsei.ac.kr

- Svetlana Lazebnik, Associate Professor, University of Illinois, slazebni@illinois.edu

- (contact) Yung-Hsiang Lu, Professor, Purdue University, yunglu@purdue.edu

- Sungroh Yoon, Associate Professor, Seoul National University, sryoon@snu.ac.kr

References

- Y. Lu, A. M. Kadin, A. C. Berg, T. M. Conte, E. P. DeBenedictis, R. Garg, G. Gingade, B. Hoang, Y. Huang, B. Li, J. Liu, W. Liu, H. Mao, J. Peng, T. Tang, E. K. Track, J. Wang, T. Wang, Y. Wang, and J. Yao. Rebooting computing and low-power image recognition challenge. In 2015 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), pages 927–932, Nov 2015.

- K. Gauen, R. Rangan, A. Mohan, Y. Lu, W. Liu, and A. C. Berg. Low-power image recognition challenge. In 2017 22nd Asia and South Pacific Design Automation Conference (ASP-DAC), pages 99–104, Jan 2017.

- K. Gauen, R. Dailey, Y. Lu, E. Park, W. Liu, A. C. Berg, and Y. Chen. Three years of low-power image recognition challenge: Introduction to special session. In 2018 Design, Automation Test in Europe Conference Exhibition (DATE), pages 700–703, March 2018.

- Sergei Alyamkin, Matthew Ardi, Achille Brighton, Alexander C. Berg, Yiran Chen, Hsin-Pai Cheng, Bo Chen, Zichen Fan, Chen Feng, Bo Fu, Kent Gauen, Jongkook Go, Alexander Goncharenko, Xuyang Guo, Hong Hanh Nguyen, Andrew Howard, Yuanjun Huang, Donghyun Kang, Jaeyoun Kim, Alexander Kondratyev, Seungjae Lee, Suwoong Lee, Junhyeok Lee, Zhiyu Liang, Xin Liu, Juzheng Liu, Zichao Li, Yang Lu, Yung-Hsiang Lu, Deeptanshu Malik, Eun-byung Park, Denis Repin, Tao Sheng, Liang Shen, Fei Sun, David Svitov, George K. Thiruvathukal, Baiwu Zhang, Jingchi Zhang, Xiaopeng Zhang, and Shaojie Zhuo. 2018 low-power image recognition challenge. CoRR, abs/1810.01732, 2018.

- Yung-Hsiang Lu, Alexander C. Berg, and Yiran Chen. Low-power image recognition challenge. AI Magazine, 39(2), Summer 2018.